Building a machine learning pipeline is an essential aspect of creating scalable, reliable, and efficient machine learning models. A well-designed pipeline not only ensures that each stage of the machine learning process is automated, but also ensures that your model performs optimally from data ingestion to final deployment. Pipelines streamline the development process, allowing data scientists to move swiftly from experimentation to production. If you’re looking to build a machine learning pipeline from scratch, this guide will walk you through every step, from data acquisition to monitoring the deployed model.

Understanding the components, strategies, and challenges of machine learning pipelines is key to mastering the craft of building robust models. By the end of this article, you’ll have a clear understanding of how to structure a machine learning pipeline from start to finish.

Importance of Building a Pipeline from Scratch

Building a machine learning pipeline from scratch is a powerful way to understand the intricacies of model development and deployment. This hands-on approach provides deeper insight into each phase, such as data preprocessing, model validation, and deployment. It also fosters flexibility. When you build a pipeline from the ground up, you have complete control over how each stage is handled, allowing you to tailor the pipeline to your specific project needs.

Moreover, building a pipeline yourself helps in understanding the potential pitfalls and trade-offs involved in various stages of the machine learning workflow. For instance, choosing the right algorithms, optimizing for computational efficiency, and ensuring smooth integration with other systems becomes second nature when you have mastered pipeline construction.

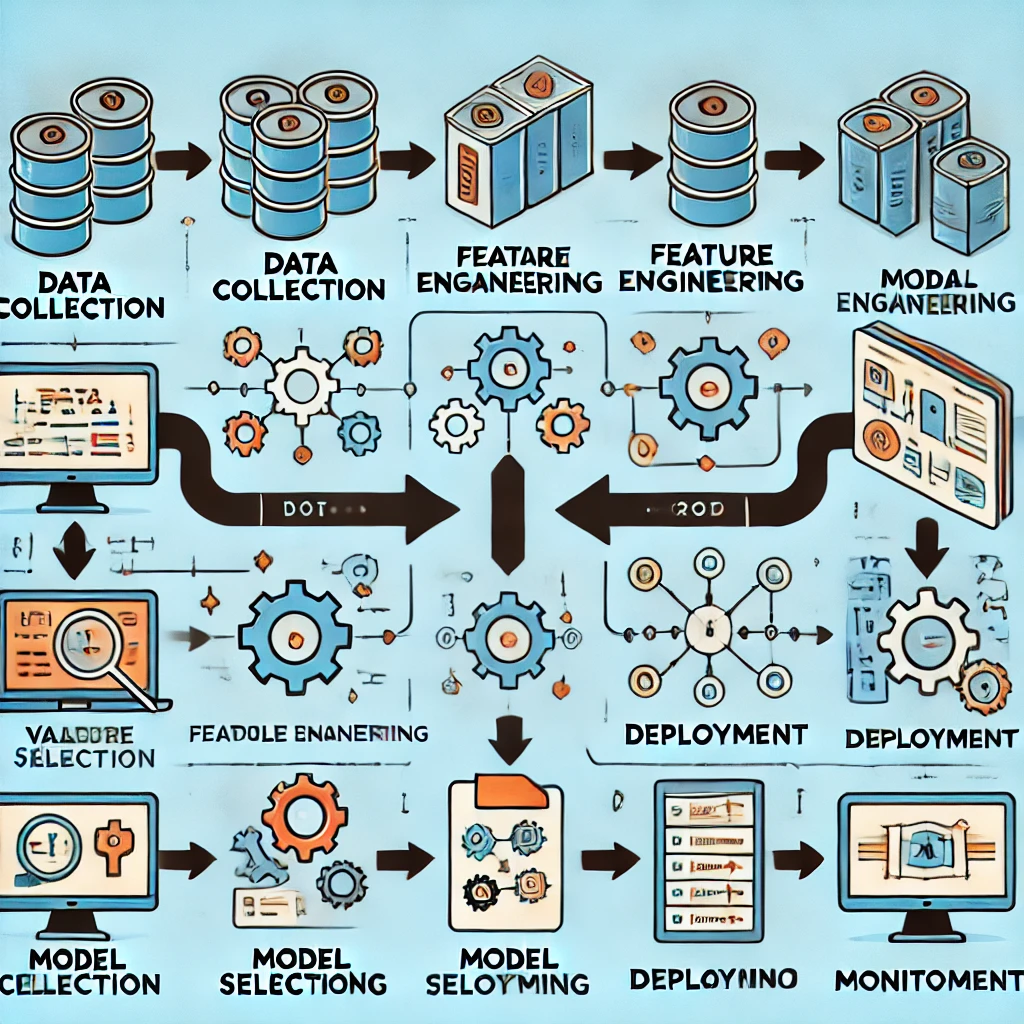

Components of a Machine Learning Pipeline

A machine learning pipeline is composed of several key components that ensure the seamless flow of data from raw form to actionable insights. These components typically include:

- Data Collection: Gathering raw data from various sources like databases, APIs, or web scraping.

- Data Preprocessing: Cleaning and preparing data for analysis by handling missing values, normalizing data, etc.

- Feature Engineering: Creating new features from existing data to improve model performance.

- Model Selection: Choosing the right algorithm based on the data type and problem (e.g., regression, classification).

- Training: Feeding the preprocessed data into the algorithm to create the model.

- Validation: Assessing the model’s performance using validation techniques like cross-validation.

- Testing: Evaluating the model on a hold-out test dataset to ensure generalization.

- Deployment: Moving the trained model to a production environment for real-world data predictions.

- Monitoring: Continuously tracking the model’s performance in production to address issues like model drift.

Each of these components plays a pivotal role in the lifecycle of a machine learning model, and ensuring they are interconnected efficiently is what makes a pipeline truly effective.

Step 1: Define the Problem Statement

Every machine learning project starts with a well-defined problem statement. This step is crucial as it lays the foundation for the entire pipeline. A clear and concise problem statement helps in framing the objective, choosing the appropriate model, and setting the evaluation metrics.

For example, if you’re trying to predict customer churn for a subscription service, the problem statement could be framed as: “How can we predict which customers are likely to cancel their subscription in the next 3 months based on their past behavior?”

This step helps in aligning the pipeline’s components towards a clear goal and ensures that the data you collect and preprocess is relevant to the problem you’re solving.

Step 2: Data Collection and Acquisition

Data collection is the lifeblood of any machine learning pipeline. The quality and quantity of data significantly impact the performance of your model. Data can be gathered from various sources depending on the problem you’re trying to solve. For instance, if you’re working on a customer churn prediction model, you might collect data from transaction logs, customer profiles, and interaction histories.

It’s essential to ensure the data is diverse, representative, and clean from the outset. In this phase, you may need to use APIs, databases, or even web scraping techniques to gather the necessary datasets. You should also make sure the data adheres to any applicable privacy laws (e.g., GDPR, HIPAA) before using it in your pipeline.

Step 3: Data Cleaning and Preprocessing

Once data is collected, it’s time to clean and preprocess it. Raw data is often incomplete, noisy, or irrelevant to the problem. Data cleaning involves removing duplicates, handling missing values, and correcting inaccuracies. Preprocessing steps, such as normalization, encoding categorical variables, and dealing with outliers, ensure that the data is in the best shape for the model to learn from.

For instance, if you’re working with categorical data like customer demographics, you’ll need to convert those categorical values into numerical formats using techniques like one-hot encoding or label encoding.

Step 4: Feature Engineering

Feature engineering is the process of creating new features from raw data to improve model accuracy. This step allows you to transform your dataset in a way that makes patterns more apparent to machine learning algorithms. Common feature engineering techniques include:

- Scaling: Normalizing data to ensure all features contribute equally to the model.

- Polynomial Features: Creating interaction terms or polynomial terms to capture non-linear relationships.

- Dimensionality Reduction: Using techniques like PCA (Principal Component Analysis) to reduce the number of features without losing essential information.

Effective feature engineering often makes the difference between a mediocre model and an outstanding one.

You can also read: How to Improve AI Accuracy with Data Preprocessing Techniques

Step 5: Data Splitting

Once your data is clean and transformed, it’s crucial to split it into different subsets. Typically, datasets are split into three parts:

- Training Set: Used to train the model.

- Validation Set: Used for tuning hyperparameters and preventing overfitting.

- Test Set: A holdout set to evaluate the final model’s performance on unseen data.

A common split is 70% for training, 15% for validation, and 15% for testing, but this can vary based on the size of the dataset and the problem at hand.