In the world of AI, the phrase “garbage in, garbage out” captures the importance of data quality. Even the most advanced AI algorithms cannot compensate for poor-quality data. The key to high-performing AI models lies in how well the input data is processed before it is fed into the model. Data preprocessing is a crucial step: done well, it leads to better generalization and more accurate predictions, and so directly shapes the overall accuracy of AI systems.

This article dives into essential data preprocessing techniques and the practical approaches you can take to improve your AI’s accuracy. From handling missing data to normalizing features, each technique can have a substantial impact on the end result. Let’s examine these methods in detail.

Understanding Data Preprocessing in AI

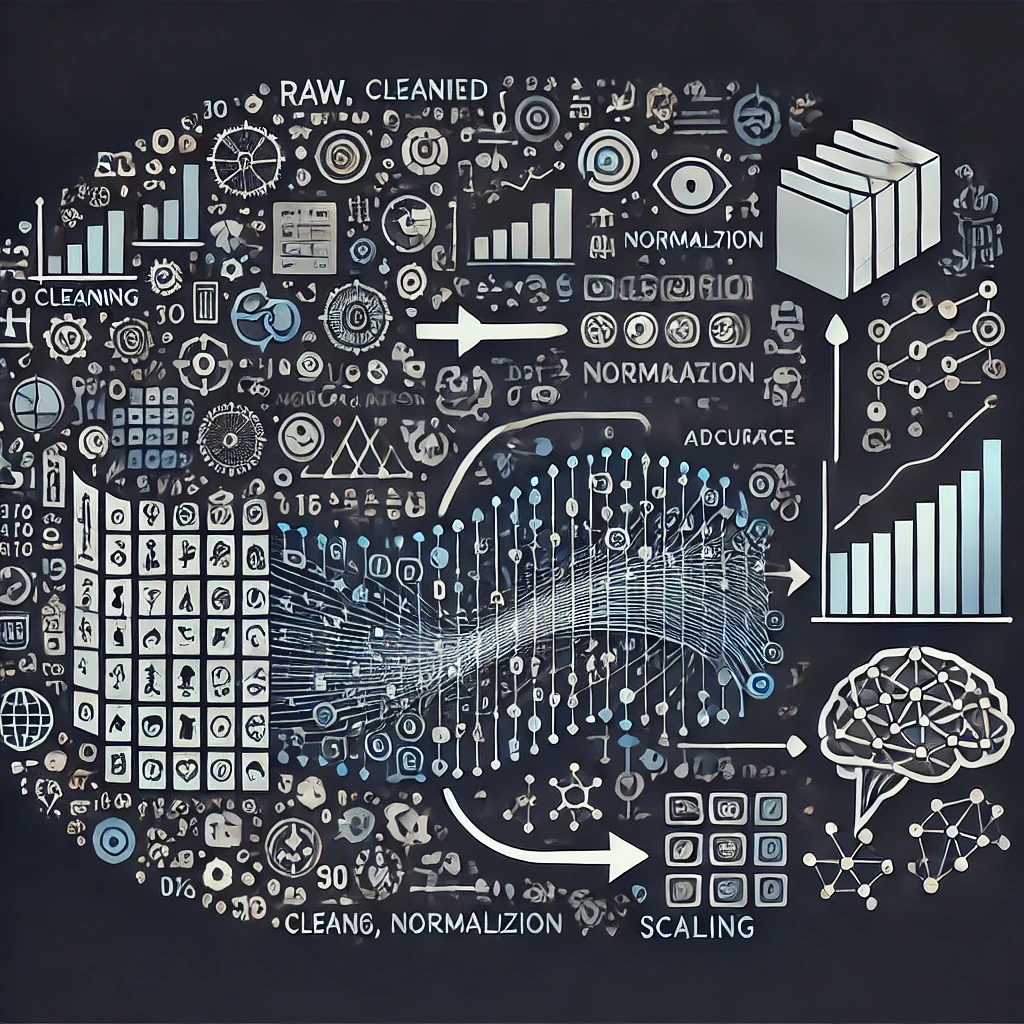

Data preprocessing is the process of transforming raw data into a clean, usable format for AI algorithms. Because most real-world datasets contain noise, inconsistencies, and missing information, preprocessing makes the data more meaningful and compatible with AI models.

Proper preprocessing not only improves the training process but also helps reduce overfitting and underfitting, two common problems that hurt model accuracy. It also helps the model focus on the most relevant features by reducing the influence of irrelevant or redundant information.

Why Is Data Preprocessing Crucial for AI Models?

Preprocessing serves as the backbone for accurate predictions because AI models rely on patterns within data. If these patterns are obscured by noise, missing values, or outliers, the AI’s learning process becomes difficult. Moreover, unprocessed data may introduce bias, leading to skewed results that can be dangerous in sensitive applications like healthcare or finance. Preprocessing techniques enable the model to learn effectively by presenting clean, organized, and well-prepared data, thus enhancing its predictive capabilities.

Cleaning Data for Enhanced Accuracy

Cleaning data is the first step in preprocessing. Raw data often contains problems that degrade AI accuracy, including missing values, outliers, and duplicate records. Addressing these issues usually improves model performance.

Handling Missing Data

Most real-world datasets have some missing values. There are several ways to handle them:

- Removal of records: If only a small fraction of records have missing values, removing those records is often the simplest approach.

- Imputation: For more extensive gaps, mean or median imputation fills missing numerical values using statistics computed from the rest of the dataset. For categorical variables, mode imputation replaces missing entries with the most frequent category.

- Advanced techniques: More sophisticated methods like K-Nearest Neighbors (KNN) or predictive modeling can fill in missing data points, taking into account the relationships between other variables.

Proper handling of missing data ensures that the model doesn’t interpret incomplete records incorrectly, improving the quality of input data and the model’s overall accuracy.
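The removal and imputation strategies above can be sketched in plain Python. This is a minimal illustration under assumptions: the toy `records` data, the helper names `drop_incomplete` and `impute`, and the use of `None` to mark a missing value are all hypothetical choices for this example. Libraries such as scikit-learn offer `SimpleImputer` and `KNNImputer` for the same jobs.

```python
from statistics import mean, median, mode

# Hypothetical toy dataset; None marks a missing value.
records = [
    {"age": 25, "income": 50_000, "city": "Paris"},
    {"age": None, "income": 62_000, "city": "Lyon"},
    {"age": 41, "income": None, "city": "Paris"},
    {"age": 35, "income": 58_000, "city": None},
    {"age": 29, "income": 61_000, "city": "Paris"},
]


def drop_incomplete(rows):
    """Removal: keep only records with no missing fields."""
    return [r for r in rows if all(v is not None for v in r.values())]


def impute(rows, column, statistic):
    """Imputation: replace missing values in `column` with a statistic of the observed values."""
    observed = [r[column] for r in rows if r[column] is not None]
    fill = statistic(observed)
    # Build new dicts so the original rows are left untouched.
    return [{**r, column: fill if r[column] is None else r[column]} for r in rows]


complete = drop_incomplete(records)       # only fully observed records survive
filled = impute(records, "age", median)   # median: robust to skewed values
filled = impute(filled, "income", mean)   # mean: reasonable for roughly symmetric data
filled = impute(filled, "city", mode)     # mode: most frequent category

print(len(complete))       # 2
print(filled[1]["age"])    # 32.0
print(filled[3]["city"])   # Paris
```

In a real pipeline, the fill statistics should be computed on the training split only and then reused for validation and test data, so that information from held-out data does not leak into training.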

Dealing with Outliers

Outliers are extreme data points that differ significantly from the rest of the dataset. These anomalies can distort the learning process and affect AI accuracy. Common techniques for handling outliers include:

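- Z-score: Measures how many standard deviations a data point lies from the mean; points with an absolute z-score above 3 are commonly flagged as outliers.

- IQR (Interquartile Range): Measures the spread of the central 50% of the data (Q3 − Q1); a common rule flags points more than 1.5 × IQR below Q1 or above Q3.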

- Capping or removal: Depending on the dataset, you can either cap extreme values to a threshold or remove them altogether.

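Removing or capping outliers helps the model learn from typical data points rather than being misled by aberrant values. Genuine extreme values, such as a fraudulent transaction, may be exactly what the model needs to learn, so inspect outliers before discarding them.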
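A minimal sketch of the three techniques, using only Python's `statistics` module. The `values` list is hypothetical, and the thresholds (3 standard deviations, 1.5 × IQR) are common conventions rather than universal rules; the helper names are illustrative.

```python
from statistics import mean, pstdev, quantiles

# Hypothetical readings: twenty typical values plus one extreme spike.
values = [10, 12] * 10 + [95]


def zscore_outliers(xs, threshold=3.0):
    """Z-score: flag points more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(xs), pstdev(xs)
    return [x for x in xs if abs(x - mu) / sigma > threshold]


def iqr_bounds(xs, k=1.5):
    """IQR: fences at Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(xs, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def cap(xs, lower, upper):
    """Capping: clamp each value into [lower, upper]."""
    return [min(max(x, lower), upper) for x in xs]


def remove(xs, lower, upper):
    """Removal: drop values outside [lower, upper]."""
    return [x for x in xs if lower <= x <= upper]


lower, upper = iqr_bounds(values)
print(zscore_outliers(values))             # [95]
print(lower, upper)                        # 7.0 15.0
print(cap(values, lower, upper)[-1])       # 15.0
print(len(remove(values, lower, upper)))   # 20
```

One design caveat: the z-score uses the mean and standard deviation, which the outlier itself inflates, so in very small samples an extreme point can stay below a threshold of 3. The IQR fences rely on quartiles and are less affected.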

Managing Duplicates

Duplicate records can bias the model toward whatever observations happen to be repeated. Removing them keeps the model from giving extra weight to the same observation during training, which could skew predictions. Deduplicating before splitting the data also prevents copies of one record from landing in both the training and test sets, which would inflate evaluation scores.
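Exact duplicates can be removed while preserving the original order with a set of records already seen. This sketch assumes each record is a hashable tuple; the `rows` data and the `deduplicate` helper are hypothetical. Near-duplicates (for example, differing only in letter case or whitespace) would need normalization first.

```python
# Hypothetical records; the third is an exact copy of the first.
rows = [
    ("alice", 34, "premium"),
    ("bob", 28, "basic"),
    ("alice", 34, "premium"),
    ("carol", 41, "basic"),
]


def deduplicate(records):
    """Keep the first occurrence of each record, preserving order."""
    seen = set()
    unique = []
    for rec in records:
        if rec not in seen:
            seen.add(rec)
            unique.append(rec)
    return unique


print(deduplicate(rows))
# [('alice', 34, 'premium'), ('bob', 28, 'basic'), ('carol', 41, 'basic')]
```

For tabular data held in pandas, `DataFrame.drop_duplicates` performs the same operation.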

Feature Engineering for Better Predictions

Feature engineering is the process of transforming raw data into informative features that help the AI model learn better. Thoughtfully crafted features can significantly enhance AI accuracy by revealing relationships and patterns in the data that the model might otherwise overlook.
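As a small, hypothetical illustration, this sketch derives two features from a raw loan application: a debt-to-income ratio and a flag for applications submitted on a weekend. The field names and the choice of features are assumptions made for the example, not recommendations for any particular model.

```python
from datetime import date

# Hypothetical raw record for a loan application.
raw = {"monthly_debt": 1_500, "monthly_income": 5_000, "applied_on": date(2024, 3, 16)}


def engineer(record):
    """Derive features that expose relationships the raw columns only imply."""
    return {
        # A ratio captures the relationship between two raw amounts directly.
        "debt_to_income": record["monthly_debt"] / record["monthly_income"],
        # weekday() returns 5 for Saturday and 6 for Sunday.
        "applied_on_weekend": record["applied_on"].weekday() >= 5,
    }


print(engineer(raw))  # {'debt_to_income': 0.3, 'applied_on_weekend': True}
```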

Feature Scaling

Many AI models, particularly distance- and margin-based algorithms such as k-nearest neighbors (KNN), k-means clustering, and Support Vector Machines (SVM), are sensitive to the scale of the data. Feature scaling brings features onto comparable ranges. The two most common scaling techniques are:

- Normalization (min-max scaling): Rescales each feature to a range between 0 and 1.

- Standardization: Rescales each feature to have a mean of 0 and a standard deviation of 1, which often helps gradient-based models converge faster during training.

By scaling features, you ensure that no single feature disproportionately influences the model’s performance.
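To see why scale matters for distance-based methods, this sketch finds the nearest neighbor of one customer with and without min-max scaling. The customers and the assumed feature ranges in `MINS` and `MAXS` are hypothetical; in practice the ranges would be learned from training data.

```python
import math

# Hypothetical customers: (age in years, annual income in dollars).
query = (30, 60_000)
candidates = {"b": (70, 61_000), "c": (32, 70_000)}

# Assumed feature ranges for min-max scaling (illustrative only).
MINS = (18, 20_000)
MAXS = (90, 200_000)


def min_max(point):
    """Rescale each feature to [0, 1] using the assumed ranges."""
    return tuple((x - lo) / (hi - lo) for x, lo, hi in zip(point, MINS, MAXS))


def nearest(q, cands, transform=lambda p: p):
    """Return the candidate name closest to q by Euclidean distance."""
    tq = transform(q)
    return min(cands, key=lambda name: math.dist(tq, transform(cands[name])))


print(nearest(query, candidates))                     # b: income differences dominate
print(nearest(query, candidates, transform=min_max))  # c: both features now count
```

Without scaling, a 40-year age gap barely registers next to a few thousand dollars of income, so customer "b" looks closest. After scaling, the small age gap and modest income gap of customer "c" make it the nearer neighbor.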

Feature Encoding

Most AI algorithms require numerical input, so categorical data must be converted into numbers. Common techniques for encoding categorical variables include:

- Label encoding: Assigns a unique integer to each category.

- One-hot encoding: Converts categories into binary vectors, where each column represents a category.

Choosing the right encoding method depends on the relationship between categories. If the categories have a natural order (e.g., education levels), an integer encoding that follows that order (ordinal encoding) is effective. If the categories are nominal (e.g., colors), one-hot encoding avoids implying a ranking that doesn’t exist.
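A minimal sketch of both encodings in plain Python. The `education` and `colors` columns and the `EDUCATION_ORDER` list are hypothetical. The order is written out explicitly because deriving it automatically (for example, by sorting alphabetically, which is what scikit-learn's `LabelEncoder` does) would not follow the real-world ranking.

```python
# Hypothetical categorical columns.
education = ["high school", "master", "bachelor", "high school", "phd"]
colors = ["red", "green", "blue", "green"]

# Ordinal encoding: integers follow an explicitly stated real-world order.
EDUCATION_ORDER = ["high school", "bachelor", "master", "phd"]
edu_rank = {level: i for i, level in enumerate(EDUCATION_ORDER)}
education_encoded = [edu_rank[level] for level in education]


def one_hot(values):
    """One-hot encoding: one binary column per distinct category."""
    categories = sorted(set(values))
    vectors = [[1 if v == cat else 0 for cat in categories] for v in values]
    return categories, vectors


color_columns, colors_encoded = one_hot(colors)

print(education_encoded)  # [0, 2, 1, 0, 3]
print(color_columns)      # ['blue', 'green', 'red']
print(colors_encoded[0])  # [0, 0, 1]  ("red")
```

A production encoder also needs a policy for categories that appear only at prediction time; this sketch would raise a `KeyError` for an unseen education level.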

Feature Selection

Reducing the number of features in a dataset can help prevent overfitting and improve model generalization. Common approaches include:

- Correlation analysis: Identifying pairs of highly correlated features and keeping only one from each pair.

- Recursive feature elimination (RFE): Iteratively removing the least important features.

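- Principal Component Analysis (PCA): A dimensionality reduction technique that combines correlated features into a smaller set of new components. Strictly speaking, this is feature extraction rather than selection, since the original features are replaced rather than kept.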

By selecting only the most impactful features, you reduce the complexity of the model while maintaining or improving its accuracy.
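Correlation-based filtering can be sketched as a greedy pass: keep a feature only if its absolute Pearson correlation with every feature kept so far is below a threshold. The `features` data, the 0.95 threshold, and the helper names are hypothetical. Which feature of a correlated pair survives is a judgment call; here it is simply the one seen first. RFE and PCA are available in scikit-learn as `sklearn.feature_selection.RFE` and `sklearn.decomposition.PCA`.

```python
import math

# Hypothetical numeric features, one list of values per column.
features = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],  # same information as height_cm
    "age": [40, 25, 33, 51, 29],
}


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def drop_correlated(cols, threshold=0.95):
    """Keep a feature only if |r| with every already-kept feature is below threshold."""
    kept = []
    for name in cols:
        if all(abs(pearson(cols[name], cols[k])) < threshold for k in kept):
            kept.append(name)
    return kept


print(drop_correlated(features))  # ['height_cm', 'age']
```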

Normalization and Standardization: Key Techniques for Model Performance

Data normalization and standardization are two critical preprocessing techniques that bring numerical data into a comparable range. These techniques help improve model accuracy, especially in algorithms sensitive to data scale.

Normalization: Rescaling Data Between 0 and 1

Normalization brings all features into a range between 0 and 1. This technique is especially useful when the data varies widely in magnitude (e.g., income vs. age). Normalization ensures that larger values do not dominate the learning process. For example, if one feature has values in the thousands while others range from 0 to 10, the AI model might give disproportionate importance to the larger feature.
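A minimal sketch of min-max normalization, x' = (x − min) / (max − min), that learns the minimum and maximum from training data and reuses them on new data. The income values and the helper names `fit_min_max` and `transform_min_max` are hypothetical.

```python
def fit_min_max(column):
    """Learn the minimum and maximum from the training data."""
    return min(column), max(column)


def transform_min_max(column, lo, hi):
    """Rescale with x' = (x - min) / (max - min) using the training-set range."""
    span = hi - lo
    if span == 0:
        return [0.0 for _ in column]  # constant feature: no spread to rescale
    return [(x - lo) / span for x in column]


# Hypothetical incomes from a training split, plus one later, unseen value.
train_income = [20_000, 35_000, 50_000, 120_000]
test_income = [140_000]

lo, hi = fit_min_max(train_income)
print(transform_min_max(train_income, lo, hi))  # [0.0, 0.15, 0.3, 1.0]
print(transform_min_max(test_income, lo, hi))   # [1.2]
```

Two points follow from the design. Values outside the training range map outside [0, 1], which is expected and preferable to refitting on test data (which would leak information). And because the range is set by the extremes, a single outlier can compress all other values into a narrow band.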

Standardization: Mean of 0 and Standard Deviation of 1

Standardization transforms each feature so that its mean is 0 and its standard deviation is 1. It is often preferred when features are roughly normally distributed, and it can stabilize training for models like Logistic Regression or SVM, typically speeding up convergence of gradient-based solvers.
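Standardization, z = (x − μ) / σ, can be sketched the same way: learn the mean and standard deviation on training data, then apply them everywhere. The `train_age` values and the helper names are hypothetical; the population standard deviation is used here, which matches the convention of scikit-learn's `StandardScaler`.

```python
from statistics import mean, pstdev


def fit_standardizer(column):
    """Learn the mean and population standard deviation from training data."""
    return mean(column), pstdev(column)


def standardize(column, mu, sigma):
    """Apply z = (x - mean) / std using the training-set statistics."""
    if sigma == 0:
        return [0.0 for _ in column]  # constant feature: nothing to rescale
    return [(x - mu) / sigma for x in column]


# Hypothetical ages from a training split.
train_age = [20, 30, 40, 50, 60]
mu, sigma = fit_standardizer(train_age)
scaled = standardize(train_age, mu, sigma)

print(round(mean(scaled), 6))    # 0.0
print(round(pstdev(scaled), 6))  # 1.0
```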

Both normalization and standardization are essential preprocessing techniques that directly contribute to better model training and more accurate predictions.

Dealing with Imbalanced Data

Imbalanced data occurs when one class in a dataset significantly outnumbers others, leading to biased models that favor the majority class. This issue is particularly common in classification tasks, such as fraud detection or medical diagnosis.

Techniques to Handle Imbalanced Data

- Resampling: Adjusting the dataset by either oversampling the minority class or undersampling the majority class. SMOTE (Synthetic Minority Over-sampling Technique) is a popular method for generating synthetic examples of the minority class.

- Class weighting: Many machine learning libraries let you assign higher weights to minority classes, so that errors on underrepresented categories cost more during training.

- Anomaly detection: Treat the minority class as anomalies and use specialized techniques to detect them more effectively.

By addressing class imbalances, you improve your AI model’s ability to detect rare but important outcomes, increasing its real-world utility and accuracy.
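Two of these techniques can be sketched in plain Python: inverse-frequency class weights, computed as n_samples / (n_classes × class_count), the same formula scikit-learn uses for `class_weight="balanced"`, and a simplified SMOTE-style oversampler. The oversampler is an illustrative assumption, not the full SMOTE algorithm (the `imbalanced-learn` library provides a complete `SMOTE` implementation). The fraud data and helper names are hypothetical.

```python
import math
import random
from collections import Counter


def balanced_class_weights(labels):
    """Class weighting: weight = n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def smote_like(minority, n_new, k=2, seed=0):
    """Simplified SMOTE-style oversampling for numeric points.

    Each synthetic point lies on the segment between a random minority point
    and one of its k nearest minority neighbors.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(x, p))[:k]
        nb = rng.choice(neighbors)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(xi + gap * (ni - xi) for xi, ni in zip(x, nb)))
    return synthetic


# Hypothetical fraud dataset: 9 legitimate vs. 3 fraudulent transactions.
labels = ["legit"] * 9 + ["fraud"] * 3
fraud_points = [(1.0, 1.0), (2.0, 2.0), (1.5, 3.0)]

print(balanced_class_weights(labels))  # {'legit': 0.6666666666666666, 'fraud': 2.0}

# Oversample the minority class until both classes have 9 examples.
new_fraud = smote_like(fraud_points, n_new=9 - len(fraud_points))
print(len(fraud_points) + len(new_fraud))  # 9
```

Resampling should be applied to the training split only; the test set must keep the real class distribution, or evaluation results will be misleading.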

Optimizing AI Accuracy with Data Preprocessing

The quality of data is critical to improving the accuracy of AI models. Through proper data preprocessing techniques such as data cleaning, feature engineering, normalization, and handling imbalanced data, you can significantly boost the performance of your AI system. The accuracy of AI doesn’t just rely on advanced algorithms—it starts with how well the data is prepared. As the saying goes, “Good data makes for good AI.”

By implementing these preprocessing steps, you can help your AI models become not only accurate but also robust, capable of making meaningful predictions in real-world applications.