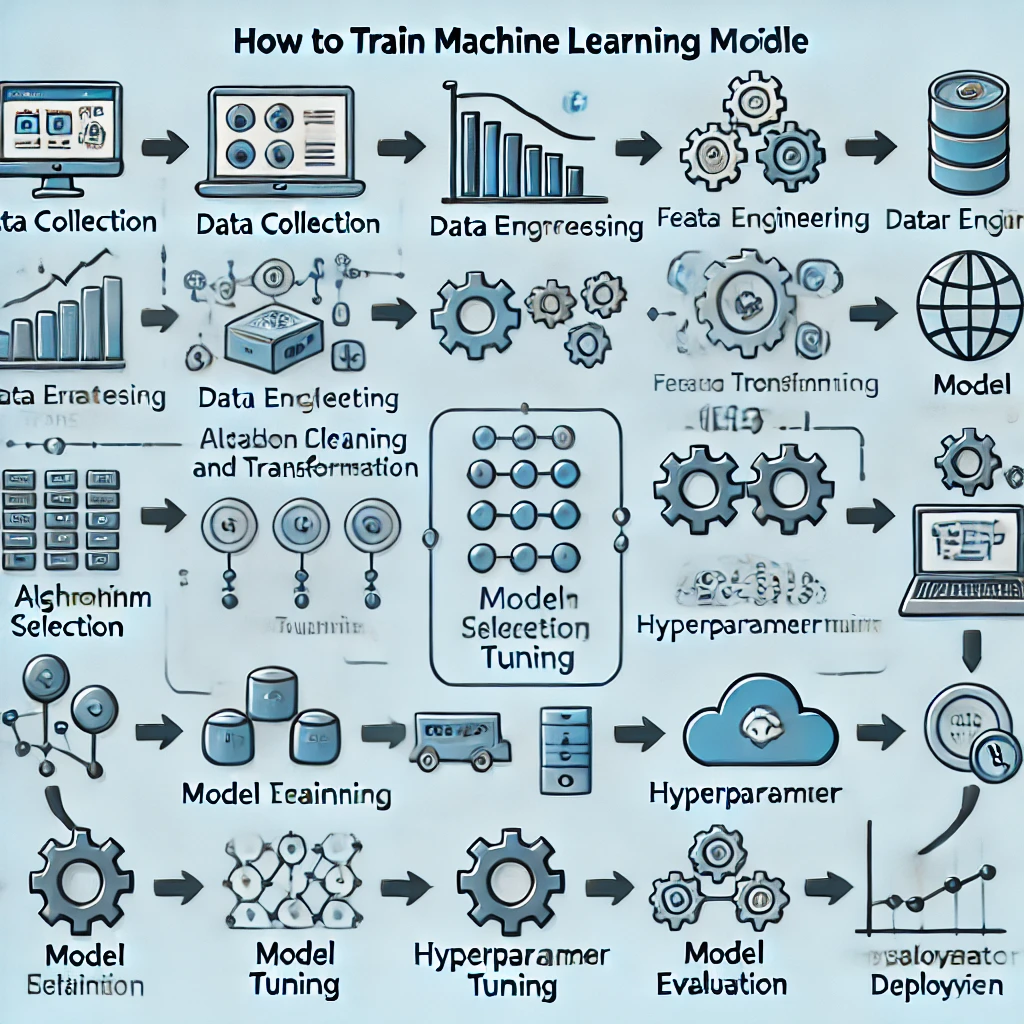

Training machine learning models is an essential part of developing artificial intelligence systems that can make predictions, classify data, or even learn complex tasks on their own. This process requires careful preparation, detailed steps, and the right tools. In this guide, we will walk through every stage of training a machine learning model, from choosing the data to deploying the final model, ensuring that each aspect of the training is done correctly and efficiently.

What is Machine Learning?

Machine learning is a branch of artificial intelligence (AI) that enables systems to learn from data rather than being explicitly programmed. Essentially, it uses algorithms that can identify patterns in datasets and improve predictions or decisions based on experience. For example, when you use an app that recommends movies or music, or when your email filters out spam, machine learning is at work. It’s becoming a cornerstone in various fields, including healthcare, finance, marketing, and autonomous systems.

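Machine learning is broadly divided into three types: supervised learning, which learns from labeled examples; unsupervised learning, which finds structure in unlabeled data; and reinforcement learning, which learns from rewards received through trial and error. Which one applies depends on the nature of the problem and the data at hand.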

The Importance of Training Machine Learning Models

Training machine learning models is where the magic happens. It’s during this phase that the system actually “learns” how to make decisions. Well-trained models form the backbone of effective AI applications, and without the proper training process, even the most sophisticated algorithms can fail.

If a model is not trained adequately, the result may be inaccurate predictions, wasted computational resources, or, worse, real-world mistakes with severe consequences (e.g., misdiagnosis in healthcare systems or faulty risk assessments in finance). Proper training aims for a model that generalizes well to unseen data by balancing bias (underfitting) against variance (overfitting).

Prerequisites for Training a Machine Learning Model

Before embarking on model training, there are several prerequisites you must meet to ensure success. First and foremost is a solid understanding of machine learning principles, statistics, and algorithms. A background in Python, R, or other programming languages commonly used for machine learning is also crucial.

In addition, you will need the following tools and skills:

- Python libraries like Scikit-learn, TensorFlow, or PyTorch

- A robust dataset relevant to the problem you’re solving

- A development environment like Jupyter Notebooks or Google Colab for writing and running your code

- A strong understanding of data structures and database management systems

Choosing the Right Data

Data is the lifeblood of machine learning. Without high-quality data, even the best algorithms will fail to perform. Choosing the right dataset is critical for the accuracy of the model, and the first step in the training process is acquiring a dataset that represents the problem domain.

When choosing data, keep the following in mind:

- Relevance: Ensure the dataset reflects the kind of task you are trying to automate or predict.

- Quantity: More data generally helps, since too few examples make overfitting likely, but volume cannot compensate for irrelevant or low-quality data.

- Quality: Data should be accurate, clean, and free from significant gaps or errors.

Datasets can be obtained from various sources, such as public repositories, company databases, or web scraping. Whatever the source, the quality of the data is paramount, which leads us to the next step: data preprocessing.

Data Preprocessing: Cleaning and Transformation

Real-world data is messy. It often contains missing values, errors, and outliers that can negatively impact the model’s performance. Data preprocessing is the step where raw data is transformed into a usable format for the model.

The primary tasks in data preprocessing include:

- Handling Missing Data: Techniques like filling missing values using mean, median, or mode imputation, or simply removing incomplete rows.

- Normalization and Scaling: Bringing features onto a consistent range, which is often essential for algorithms sensitive to feature magnitude, such as k-nearest neighbors, support vector machines, and models trained with gradient descent.

- Data Encoding: Converting categorical data into numerical values (e.g., one-hot encoding).

- Removing Outliers: Identifying outliers that could skew the model’s results, and removing or capping them when they reflect errors rather than genuine extreme values.

By cleaning and transforming the data, we ensure that the model training process can proceed smoothly and efficiently.
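
The preprocessing tasks above can be sketched with scikit-learn. This is a minimal illustration, not a complete pipeline: the toy arrays (`numeric`, `colors`) and their values are made up for the example, and median imputation is just one of the options mentioned. Outlier handling is left out to keep the sketch short.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data: columns are age and income; np.nan marks missing values.
numeric = np.array([
    [25.0, 50000.0],
    [np.nan, 62000.0],
    [47.0, np.nan],
    [35.0, 58000.0],
])
# Hypothetical categorical column (one value per row).
colors = np.array([["red"], ["blue"], ["red"], ["green"]])

# Handle missing data: replace each NaN with its column's median.
imputed = SimpleImputer(strategy="median").fit_transform(numeric)

# Scale: standardize each column to zero mean and unit variance.
scaled = StandardScaler().fit_transform(imputed)

# Encode: one-hot encode the categorical column.
# The encoder returns a sparse matrix by default; .toarray() makes it dense.
encoded = OneHotEncoder().fit_transform(colors).toarray()

# Combine everything into a single feature matrix for the model.
features = np.hstack([scaled, encoded])
print(features.shape)  # (4, 5)
```

In a real project, the imputer, scaler, and encoder would normally be fitted on the training data only and then reused on the test data, so that no information from the test set leaks into training.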

Feature Engineering

Feature engineering is the process of selecting and transforming input data to create meaningful features that the model can use to improve predictions. Identifying key features from the dataset that significantly contribute to the prediction outcome is crucial to model success.

Examples of feature engineering include:

- Creating new features based on domain knowledge

- Transforming existing features by using logarithmic or polynomial transformations

- Applying dimensionality reduction techniques such as PCA (Principal Component Analysis) to combine correlated, redundant features into a smaller set of components
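
As a rough sketch of these three ideas, the snippet below builds a ratio feature, applies a log transform, and runs PCA on data with deliberately redundant columns. All column names and values (`price`, `area`, `income`) are hypothetical, and the data is randomly generated for illustration only.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# New feature from domain knowledge: price per unit area (hypothetical columns).
price = np.array([200.0, 450.0, 120.0])
area = np.array([50.0, 90.0, 40.0])
price_per_area = price / area

# Log transform to compress a right-skewed feature; log1p handles zeros safely.
income = np.array([0.0, 9.0, 99.0])
log_income = np.log1p(income)

# Synthetic data: 3 independent features plus 2 near-copies of the first two.
base = rng.normal(size=(100, 3))
noise = rng.normal(scale=0.01, size=(100, 2))
X = np.hstack([base, base[:, :2] + noise])

# PCA projects the 5 columns onto 3 components; the redundant columns
# add almost no new variance, so very little information is lost.
pca = PCA(n_components=3)
X_reduced = pca.fit_transform(X)
print(X_reduced.shape)                       # (100, 3)
print(pca.explained_variance_ratio_.sum())   # close to 1.0
```

Here the number of components is known in advance because the redundancy was constructed by hand; on real data it is usually chosen by inspecting the explained variance.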

Selecting the Right Algorithm

The next crucial step in the process of training machine learning models is choosing the right algorithm. Depending on the task, you can select from various algorithms, such as:

- Classification Algorithms (e.g., Decision Trees, Logistic Regression, Random Forests)

- Regression Algorithms (e.g., Linear Regression, Support Vector Regression)

- Clustering Algorithms (e.g., K-Means, DBSCAN)

The algorithm choice depends on the nature of your data and your problem: whether you are trying to classify data, predict continuous values, or identify patterns. Many algorithm families, including decision trees, random forests, and support vector machines, have both classification and regression variants. No one algorithm fits all tasks, and sometimes it takes experimentation with multiple methods to find the best one for your dataset.
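
One way to run that kind of experiment is to score several candidate models on the same data and compare the results. The sketch below assumes a classification task and uses a synthetic dataset from `make_classification` as a stand-in for real data; the three candidates and their settings are arbitrary choices for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a real dataset (an assumption for illustration).
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Candidate models to compare; hyperparameters are left near their defaults.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(random_state=0),
    "random_forest": RandomForestClassifier(n_estimators=50, random_state=0),
}

# Mean accuracy over 5 cross-validation folds for each candidate.
mean_scores = {
    name: cross_val_score(model, X, y, cv=5).mean()
    for name, model in candidates.items()
}

best_name = max(mean_scores, key=mean_scores.get)
for name, score in mean_scores.items():
    print(f"{name}: {score:.3f}")
print("best:", best_name)
```

Accuracy is only one possible metric; for imbalanced classes, something like F1 or ROC AUC (passed through the `scoring` parameter) may be a better basis for comparison.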

Splitting Data into Training and Test Sets

Once you’ve prepared your data and chosen an algorithm, the next step is to split your data into training and test sets. Evaluating on data the model never saw during training gives an honest estimate of its performance and exposes overfitting. Typically, data is split in an 80/20 or 70/30 ratio, where the majority is used for training and the remainder is reserved for testing.

Another commonly used technique is cross-validation (often k-fold), where the data is divided into several subsets, called folds, and the model is trained multiple times, each time holding out a different fold for testing. Averaging the results gives a more robust estimate of model performance than a single split.
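
Both approaches can be sketched with scikit-learn's `train_test_split` and `KFold`. The dataset below is a hypothetical placeholder (100 samples, 4 features), and the 80/20 ratio and 5 folds are example settings rather than recommendations.

```python
import numpy as np
from sklearn.model_selection import KFold, train_test_split

# Hypothetical dataset: 100 samples, 4 features, balanced binary labels.
X = np.arange(400).reshape(100, 4)
y = np.arange(100) % 2

# 80/20 hold-out split. random_state makes the shuffle reproducible;
# stratify=y keeps the class proportions the same in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)
print(X_train.shape, X_test.shape)  # (80, 4) (20, 4)

# 5-fold cross-validation: each sample lands in the test fold exactly once.
kfold = KFold(n_splits=5, shuffle=True, random_state=42)
fold_sizes = []
tested = []
for train_idx, test_idx in kfold.split(X):
    fold_sizes.append((len(train_idx), len(test_idx)))
    tested.extend(test_idx)
print(fold_sizes)  # five (80, 20) pairs
```

In practice a model would be fitted on `train_idx` and scored on `test_idx` inside the loop, or the whole procedure delegated to a helper such as `cross_val_score`.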