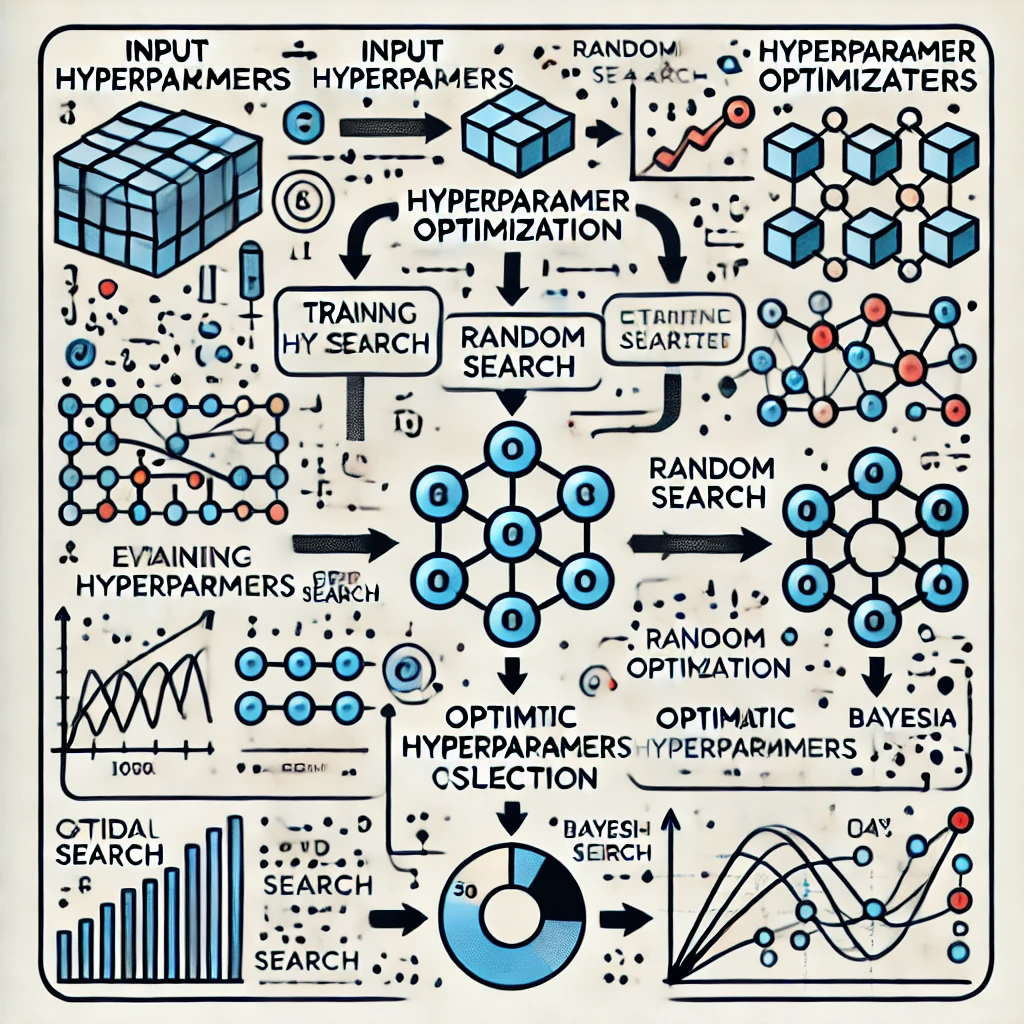

Tuning hyperparameters is essential for getting the best performance out of a machine learning model. Hyperparameter optimization is the process of selecting the combination of hyperparameters that lets a model generalize well to new data. This step directly affects a model’s accuracy, training speed, and efficiency, and doing it well requires understanding the available optimization techniques. In this article, we will explore several methods to optimize hyperparameters, including grid search, random search, and Bayesian optimization, along with practical strategies to improve your machine learning pipeline.

Introduction to Hyperparameter Optimization

In any machine learning model, hyperparameters control the learning process and determine how well the model learns from the training data. Unlike model parameters, which are learned during training, hyperparameters are set before the learning process begins. For instance, in a neural network, hyperparameters include the learning rate, the number of hidden layers, and the number of units per layer. These settings play a pivotal role in shaping the model’s behavior and performance.

Finding the right hyperparameters is challenging. It’s akin to tuning an engine: if any component is slightly off, the entire system can fail to deliver optimal performance. Optimizing hyperparameters is not just about brute-force testing every possible combination; it requires strategy, time management, and efficient use of compute resources.

What Are Hyperparameters in Machine Learning?

Before delving into the optimization techniques, it’s vital to distinguish between hyperparameters and parameters. Parameters, such as weights in a neural network, are learned from the data during training. Hyperparameters, on the other hand, are fixed before training begins, whether chosen by hand or by a search procedure, and they dictate how the model will learn. Examples of hyperparameters include:

- Learning rate

- Batch size

- Number of epochs

- Number of trees in a random forest

- Kernel type in a support vector machine

Selecting inappropriate values for these hyperparameters can lead to overfitting, underfitting, or longer training times, which underscores the importance of hyperparameter optimization.
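To make the distinction concrete, here is a minimal sketch that fits a one-weight linear model by gradient descent. The toy data and the `train` function are assumptions made up for illustration: `learning_rate` and `epochs` are hyperparameters passed in before training, while the weight `w` is a parameter learned from the data.

```python
# Toy data generated from y = 3x, so the weight to recover is 3.0.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]

def train(learning_rate, epochs):
    """Fit y = w * x by gradient descent on mean squared error."""
    w = 0.0  # parameter: learned from the data
    for _ in range(epochs):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * grad
    return w

# Hyperparameters: chosen before training and never updated by it.
w_good = train(learning_rate=0.05, epochs=50)    # converges close to 3.0
w_slow = train(learning_rate=0.0001, epochs=50)  # still far from 3.0
```

With the same data and the same number of epochs, the smaller learning rate leaves the model badly underfit, which is the kind of outcome hyperparameter optimization tries to avoid.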

Why Is Hyperparameter Optimization Important?

Finding the right set of hyperparameters can be the difference between a model that generalizes well on unseen data and one that only performs well on training data but fails on validation or test sets. Poor hyperparameter choices can:

- Cause overfitting or underfitting.

- Lead to inefficient models with slower convergence rates.

- Result in models that perform poorly on unseen data.

Thus, optimizing hyperparameters is essential for achieving a high-performing model. Fortunately, there are several techniques available to aid in this process.

Key Methods to Optimize Hyperparameters in Machine Learning

Grid Search

One of the simplest and most commonly used hyperparameter optimization techniques is Grid Search. This method systematically searches a manually specified subset of the hyperparameter space, evaluating every combination of the listed values.

Grid search is exhaustive, which is both its strength and its limitation. It is guaranteed to find the best combination on the grid, though not necessarily the best in the full hyperparameter space, and it is computationally expensive when the grid is large. The number of combinations is the product of the number of values tried for each hyperparameter, so it grows exponentially with the number of hyperparameters: three hyperparameters with ten values each already require 1,000 training runs. This makes grid search impractical for models with many hyperparameters or for large datasets.

Grid Search works well when:

- The hyperparameter space is relatively small.

- You want to see how hyperparameters interact, since every combination on the grid is evaluated, including ones where the relationship between hyperparameters is highly non-linear.

However, for models requiring extensive computation and large parameter spaces, other methods like random search or Bayesian optimization are more efficient.
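As a rough illustration of the procedure described above, the following sketch enumerates a small grid with `itertools.product`. The `validation_score` function is a hypothetical stand-in for training a model and returning its validation score; the grid values are arbitrary.

```python
import itertools

def validation_score(learning_rate, batch_size):
    """Hypothetical stand-in for 'train a model, return validation score'.

    Higher is better; the peak is at learning_rate=0.01, batch_size=64.
    """
    return -((learning_rate - 0.01) * 100) ** 2 - ((batch_size - 64) / 64) ** 2

grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [32, 64, 128],
}

def grid_search(objective, grid):
    """Evaluate every combination in the grid and keep the best one."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    n_evaluated = 0
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(**params)
        n_evaluated += 1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_evaluated

best_params, best_score, n_evaluated = grid_search(validation_score, grid)
```

Two hyperparameters with three values each cost 9 evaluations; adding a third hyperparameter with three values would raise that to 27, which is why the grid has to stay small.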

Random Search

While grid search tests every combination on a predefined grid, Random Search samples combinations at random from specified ranges or distributions and evaluates them. Bergstra and Bengio (2012) showed that random search is often more efficient than grid search, especially when only a few hyperparameters significantly affect model performance.

Random search is beneficial when:

- You have a high-dimensional hyperparameter space.

- You are unsure which hyperparameters have the most significant influence on performance.

Since it doesn’t test every combination, it can cover a larger hyperparameter space in the same amount of time as a grid search, making it a more time-efficient alternative.
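A minimal sketch of random search, under the assumption that the learning rate is sampled log-uniformly and the batch size from a fixed list. As before, `validation_score` is a hypothetical stand-in for a real training run.

```python
import random

def validation_score(learning_rate, batch_size):
    """Hypothetical stand-in for 'train a model, return validation score'."""
    return -((learning_rate - 0.01) * 100) ** 2 - ((batch_size - 64) / 64) ** 2

def random_search(objective, n_trials, seed=0):
    """Sample n_trials random configurations and keep the best one."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {
            # Log-uniform over [1e-4, 1e-1]: each order of magnitude is equally likely.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        score = objective(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

best_10 = random_search(validation_score, n_trials=10)
best_50 = random_search(validation_score, n_trials=50)
```

The number of trials is set directly and does not depend on how many hyperparameters are searched, so the budget stays under your control as the space grows.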

Bayesian Optimization

Unlike grid or random search, Bayesian Optimization doesn’t treat the evaluation of hyperparameter configurations as independent. Instead, it builds a probabilistic model of the objective function and uses it to choose the next hyperparameter set to evaluate.

Bayesian optimization focuses on regions of the hyperparameter space that are likely to contain the optimal set of hyperparameters. The idea is to balance exploration (searching new areas) and exploitation (focusing on promising regions); an acquisition function, such as expected improvement, scores candidate points to make this trade-off. Gaussian Processes (GPs) are commonly used as the underlying probabilistic (surrogate) model for Bayesian optimization.

This method excels when:

- Each evaluation (e.g., training a model) is expensive.

- The search space is complex with a non-linear response surface.

The key benefit of Bayesian optimization is that it requires fewer evaluations to find a good set of hyperparameters, which makes it highly efficient for expensive machine learning models.
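The loop described above can be sketched in plain Python for a single hyperparameter. Everything here is an illustrative assumption rather than a production implementation: the objective is a made-up function of log10(learning rate), the surrogate is a zero-mean Gaussian Process with an RBF kernel of length scale 1, and the acquisition function is an upper confidence bound (mean plus `kappa` times standard deviation) instead of expected improvement.

```python
import math

def validation_score(log_lr):
    """Hypothetical expensive objective; peaks at log10(lr) = -2."""
    return -(log_lr + 2.0) ** 2

def rbf(a, b, length_scale=1.0):
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def gp_posterior(xs, ys, x_new, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(k * w for k, w in zip(k_star, solve(K, ys)))
    var = 1.0 - sum(k * v for k, v in zip(k_star, solve(K, k_star)))
    return mean, math.sqrt(max(var, 0.0))

def bayesian_optimize(objective, candidates, initial, n_iter=8, kappa=2.0):
    xs = list(initial)
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        # Standardize scores so the unit-variance GP prior is a reasonable fit.
        mu = sum(ys) / len(ys)
        sd = math.sqrt(sum((y - mu) ** 2 for y in ys) / len(ys)) or 1.0
        zs = [(y - mu) / sd for y in ys]

        def ucb(x):
            mean, std = gp_posterior(xs, zs, x)
            return mean + kappa * std  # high mean = exploit, high std = explore

        remaining = [c for c in candidates if c not in xs]
        x_next = max(remaining, key=ucb)
        xs.append(x_next)
        ys.append(objective(x_next))
    best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best], xs

candidates = [i / 10 for i in range(-40, 1)]  # log10(lr) from -4.0 to 0.0
best_x, best_y, history = bayesian_optimize(
    validation_score, candidates, initial=[-4.0, -1.0]
)
```

Only ten objective evaluations are made in total (two initial points plus eight chosen by the surrogate), whereas a grid over the same 41 candidates would need 41. In practice a dedicated library would replace the hand-written GP.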

Randomized Grid Search

A hybrid approach, Randomized Grid Search, blends the structure of grid search with the efficiency of random search. Instead of testing every possible combination of hyperparameters, this technique evaluates a random sample of points from the grid. It trades thoroughness for computational cost: only a fixed number of grid points are evaluated, which reduces the number of iterations.

Randomized grid search works well when:

- You are looking for a faster alternative to grid search but with some systematic sampling.

- The model’s hyperparameter space is too large to handle exhaustive grid search.

This method provides a middle ground for users who need efficient sampling but still prefer the grid structure for parameter exploration.
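One way to sketch this idea is to build the grid and draw a fixed number of distinct points from it. The grid values and the `sample_grid` helper are assumptions for illustration; note that this version materializes the whole grid in memory, which only works when the grid is of moderate size.

```python
import itertools
import random

grid = {
    "learning_rate": [0.001, 0.003, 0.01, 0.03, 0.1],
    "batch_size": [16, 32, 64, 128],
    "num_layers": [1, 2, 3],
}

def sample_grid(grid, n_samples, seed=0):
    """Draw n_samples distinct grid points uniformly at random."""
    names = list(grid)
    all_points = list(itertools.product(*(grid[name] for name in names)))
    rng = random.Random(seed)
    chosen = rng.sample(all_points, n_samples)  # sampling without replacement
    return [dict(zip(names, values)) for values in chosen]

# The full grid has 5 * 4 * 3 = 60 points; only 10 of them are evaluated.
subset = sample_grid(grid, n_samples=10)
```

As far as I am aware, scikit-learn’s RandomizedSearchCV behaves in a similar way when every hyperparameter is given as a list of values, so this pattern is available off the shelf.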

Evolutionary Algorithms

Evolutionary Algorithms are a class of optimization algorithms inspired by the process of natural selection. These algorithms maintain a population of candidate solutions, iteratively improving the population through mutation, crossover, and selection based on fitness.

In the context of hyperparameter optimization, evolutionary algorithms can search large spaces efficiently. They work by evolving a set of hyperparameter combinations, selecting the best performers, and combining them to generate new candidates.

Evolutionary algorithms are suitable for:

- Hyperparameter spaces that are too complex for grid or random search.

- Scenarios where parallel compute is available, as these algorithms can evaluate all hyperparameter sets in a generation simultaneously.

While evolutionary algorithms can provide near-optimal results, they typically need many evaluations and can therefore require more computational resources than other techniques.
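The mutation, crossover, and selection steps can be sketched as a small genetic algorithm. The `fitness` function, the two hyperparameters, and all rates and population sizes below are hypothetical choices for illustration, not recommended settings.

```python
import random

def fitness(ind):
    """Hypothetical validation score; peaks at log_lr=-2, num_layers=3."""
    return -(ind["log_lr"] + 2.0) ** 2 - 0.1 * (ind["num_layers"] - 3) ** 2

def random_individual(rng):
    return {"log_lr": rng.uniform(-5.0, 0.0), "num_layers": rng.randint(1, 6)}

def crossover(a, b, rng):
    # Uniform crossover: each hyperparameter comes from one parent at random.
    return {key: rng.choice([a[key], b[key]]) for key in a}

def mutate(ind, rng, rate=0.3):
    # Perturb each hyperparameter with probability `rate`, staying in bounds.
    child = dict(ind)
    if rng.random() < rate:
        child["log_lr"] = min(0.0, max(-5.0, child["log_lr"] + rng.gauss(0.0, 0.3)))
    if rng.random() < rate:
        child["num_layers"] = min(6, max(1, child["num_layers"] + rng.choice([-1, 1])))
    return child

def evolve(pop_size=20, generations=15, n_parents=5, seed=0):
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        # Selection: the fittest individuals survive and become parents.
        parents = sorted(population, key=fitness, reverse=True)[:n_parents]
        best_per_generation.append(fitness(parents[0]))
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - n_parents)
        ]
        population = parents + children
    return max(population, key=fitness), best_per_generation

best, best_per_generation = evolve()
```

Because the parents are carried over unchanged, the best fitness never decreases from one generation to the next. In a real setting each call to `fitness` is a training run, and the individuals in a generation can be evaluated in parallel.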

Common Challenges in Hyperparameter Optimization

Computational Cost

Hyperparameter optimization, particularly when dealing with large datasets or complex models like deep neural networks, can be computationally expensive. Running multiple experiments to evaluate different combinations of hyperparameters might require extensive hardware resources and time, especially for exhaustive search methods like grid search.

Overfitting During Optimization

Optimizing hyperparameters to maximize performance on a validation set can lead to overfitting to the validation set itself. If hyperparameters are too closely tuned to this data, the model may perform well in validation but poorly in real-world applications. Techniques like cross-validation can mitigate this risk by evaluating performance across multiple subsets of the training data.
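Here is a minimal sketch of k-fold cross-validation used to score one hyperparameter. The model is a one-dimensional ridge regression without intercept, chosen only because it has a one-line closed form; the synthetic data and the candidate values of the regularization strength `lam` are assumptions for illustration.

```python
import random

def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs for k contiguous folds."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, val
        start += size

def fit_ridge(xs, ys, lam):
    """Closed-form 1-D ridge regression without intercept."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def cv_mse(xs, ys, lam, k=5):
    """Mean validation MSE over k folds for one value of lam."""
    fold_errors = []
    for train, val in k_fold_indices(len(xs), k):
        w = fit_ridge([xs[i] for i in train], [ys[i] for i in train], lam)
        fold_errors.append(sum((w * xs[i] - ys[i]) ** 2 for i in val) / len(val))
    return sum(fold_errors) / k

# Synthetic data: y = 2x plus Gaussian noise.
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(40)]
ys = [2.0 * x + rng.gauss(0.0, 0.5) for x in xs]

scores = {lam: cv_mse(xs, ys, lam) for lam in [0.0, 0.1, 1.0, 10.0, 100.0]}
best_lam = min(scores, key=scores.get)
```

Each candidate is judged on five different validation folds rather than one, so a value that only happens to suit a single split is less likely to win. The folds here are contiguous because the synthetic data is already in random order; real data would usually be shuffled first.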

Dynamic Nature of Model Development

Hyperparameter optimization is often an iterative process. As new data is introduced or the problem evolves, the optimal hyperparameters may change. Keeping the model up-to-date with the best hyperparameters requires frequent re-evaluation and re-optimization.

Best Practices for Hyperparameter Optimization

- Start Simple: Begin with simple optimization techniques like grid or random search before moving to more sophisticated methods like Bayesian optimization.

- Use Cross-Validation: Always validate the performance of different hyperparameter sets across multiple folds to avoid overfitting to a single validation set.

- Set Budget Limits: Given the computational expense of some methods, define clear resource constraints (e.g., number of iterations or time limit) to prevent excessive computational costs.

- Automate the Process: Many machine learning frameworks and libraries offer automated hyperparameter optimization tools (e.g., scikit-learn’s GridSearchCV or RandomizedSearchCV), which can simplify the process.

- Leverage Parallel Computing: If possible, parallelize the search process to evaluate multiple hyperparameter configurations simultaneously, reducing the time required for optimization.
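The budget and parallelism practices above can be combined in a short sketch: a random search that stops at whichever comes first, a maximum number of trials or a time limit, and that evaluates each batch of configurations concurrently. The objective and all names and limits are hypothetical. Threads are used here to keep the example simple, which helps only when the work releases the interpreter lock (native training code or I/O, for example); CPU-bound pure-Python work would need processes instead.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor

def validation_score(params):
    """Hypothetical stand-in for training and scoring one configuration."""
    time.sleep(0.01)  # simulates work that runs outside the interpreter lock
    return -(params["log_lr"] + 2.0) ** 2

def budgeted_parallel_search(max_trials=40, time_limit_s=5.0, batch_size=4, seed=0):
    rng = random.Random(seed)
    deadline = time.monotonic() + time_limit_s
    results = []
    with ThreadPoolExecutor(max_workers=batch_size) as pool:
        # Stop when either budget is exhausted: trial count or wall-clock time.
        while len(results) < max_trials and time.monotonic() < deadline:
            n = min(batch_size, max_trials - len(results))
            batch = [{"log_lr": rng.uniform(-5.0, 0.0)} for _ in range(n)]
            scores = list(pool.map(validation_score, batch))  # evaluated concurrently
            results.extend(zip(batch, scores))
    return max(results, key=lambda r: r[1]), len(results)

(best_params, best_score), n_done = budgeted_parallel_search()
```

The time limit is checked between batches, so a run can overshoot the deadline by at most one batch. That is usually acceptable and keeps the loop simple.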